The LFS Cache tries to look up the file by its pointer if it doesn't have it already, it requests it from the remote LFS Store. It will then ask the local LFS Cache to deliver it.

Whenever Git in your local repository encounters an LFS-managed file, it will only find a pointer - not the file's actual data.

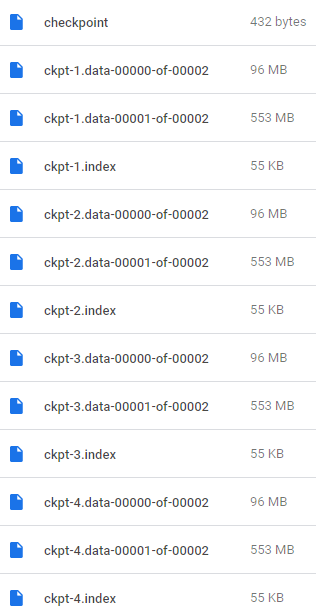

But what about the other versions of an LFS-managed file? Pointers Instead of Real Dataīut what exactly is stored in your local repository? We already heard that, in terms of actual files, only those items are present that are actually needed in the currently checked out revision. If you switch branches, it will automatically check if you need a specific version of such a big file and get it for you - on demand. Instead, it only provides the files you actually need in your checked out revision. The LFS extension uses a simple technique to avoid your local Git repository from exploding like that: it does not keep all versions of a file on your machine. And, as already mentioned, most of this data will be of little value: usually, old versions of files aren't used on a daily basis - but they still weigh a lot of Megabytes.
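To make the pointer lookup more concrete, here is a minimal Python sketch of the idea. It is not how Git LFS is implemented: the function names `make_pointer`, `parse_pointer` and `deliver` are hypothetical, and the two dictionaries are simple stand-ins for the local LFS Cache and the remote LFS Store. The pointer text follows the three-line layout (version, oid, size) of the Git LFS pointer format as I understand it.

```python
import hashlib


def make_pointer(data: bytes) -> str:
    """Build pointer text (version, oid, size) for the given file content."""
    oid = hashlib.sha256(data).hexdigest()
    return (
        "version https://git-lfs.github.com/spec/v1\n"
        f"oid sha256:{oid}\n"
        f"size {len(data)}\n"
    )


def parse_pointer(text: str) -> dict:
    """Turn each 'key value' line of a pointer into a dictionary entry."""
    return dict(line.split(" ", 1) for line in text.splitlines() if line)


def deliver(pointer_text: str, cache: dict, remote_store: dict) -> bytes:
    """Return the real content for a pointer, filling the cache on a miss."""
    oid = parse_pointer(pointer_text)["oid"]
    if oid not in cache:
        # Cache miss: request the content from the (stand-in) remote store.
        cache[oid] = remote_store[oid]
    return cache[oid]


if __name__ == "__main__":
    photo = b"pretend this is a 100 MB Photoshop file"
    pointer = make_pointer(photo)
    remote_store = {parse_pointer(pointer)["oid"]: photo}
    cache = {}

    print(pointer)
    # First request goes to the remote store and populates the cache.
    assert deliver(pointer, cache, remote_store) == photo
    # Second request is served from the cache; the remote is not needed.
    assert deliver(pointer, cache, {}) == photo
```

The point of the sketch is that the repository only needs the few lines of pointer text, while the heavy content is fetched by its hash when a checkout actually requires it.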


Handling Large Files with LFS

Working with large binary files can be quite a hassle: they bloat your local repository and leave you with gigabytes of data on your machine. Most annoyingly, the majority of this data is probably useless to you: most of the time, you don't need each and every version of a file on your disk. With this problem in mind, Git's standard feature set was enhanced with the "Large File Storage" extension, in short "Git LFS". An LFS-enhanced local Git repository will be significantly smaller in size because it breaks one basic rule of Git in an elegant way: it does not keep all of the project's data in your local repository.

Let's say you have a 100 MB Photoshop file in your project. When you make a change to this file (no matter how tiny it might be), committing this modification will save the complete file (huge as it is) in your repository. After a couple of iterations, your local repository will weigh hundreds of megabytes and soon gigabytes. When a coworker clones that repository to her local machine, she will need to download a huge amount of data.

Once a file pattern is tracked, any file you add whose name matches the pattern will automatically be handled by LFS. You can add multiple patterns this way, and you can see the patterns being tracked using the command git lfs track. You should push the changes whenever you change what files are being tracked so everyone who clones the repo has the tracking patterns.

Once a Git LFS file has been registered as ‘lockable’, it can be ‘locked’ to stop others from editing it while you’re working on it. This can be helpful if you work with large binary assets that can’t be merged. Each file can only be locked by one person at a time. Locked files can only be unlocked by the person who locked them (see below for how to force unlock files). If your ‘push’ contains locked files that you didn’t lock, it will be rejected. If your ‘merge’ contains locked files that you didn’t lock, it will be blocked.
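As a rough illustration of how tracking patterns decide which files LFS handles, the following Python sketch reads a sample `.gitattributes` text and checks file names against it. Several things here are assumptions rather than facts from this article: the sample attribute lines reflect my understanding of what git lfs track writes, the `lockable` attribute is assumed to be how a pattern is registered as lockable, and the helper names `lfs_patterns` and `is_lfs_tracked` are hypothetical. The matching is deliberately simplified to the file's base name and does not reproduce Git's full attribute-matching rules.

```python
from fnmatch import fnmatch

# Sample .gitattributes content (assumed shape, see the note above).
GITATTRIBUTES = """\
*.psd filter=lfs diff=lfs merge=lfs -text lockable
*.mp4 filter=lfs diff=lfs merge=lfs -text
*.txt text
"""


def lfs_patterns(attributes_text):
    """Map each LFS-tracked pattern to whether it is marked lockable."""
    patterns = {}
    for line in attributes_text.splitlines():
        parts = line.split()
        # Only lines that route the pattern through the LFS filter count.
        if len(parts) >= 2 and "filter=lfs" in parts[1:]:
            patterns[parts[0]] = "lockable" in parts[1:]
    return patterns


def is_lfs_tracked(filename, patterns):
    """Simplified check: match only the base name against each pattern."""
    base = filename.rsplit("/", 1)[-1]
    return any(fnmatch(base, pattern) for pattern in patterns)


if __name__ == "__main__":
    patterns = lfs_patterns(GITATTRIBUTES)
    for name in ["design/mockup.psd", "video/intro.mp4", "README.txt"]:
        print(name, "->", "LFS" if is_lfs_tracked(name, patterns) else "plain Git")
```

Because the patterns live in a file inside the repository, pushing that file is what gives everyone who clones the repo the same tracking rules.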
